Corresponding authors: Masaaki Komatsu, maskomat@ncc.go.jp; Ryuji Hamamoto, rhamamot@ncc.go.jp

DOI: 10.31662/jmaj.2024-0203

Received: July 30, 2024

Accepted: August 17, 2024

Advance Publication: September 27, 2024

Published: January 15, 2025

Cite this article as:

Komatsu M, Teraya N, Natsume T, Harada N, Takeda K, Hamamoto R. Clinical Application of Artificial Intelligence in Ultrasound Imaging for Oncology. JMA J. 2025;8(1):18-25.

Ultrasound (US) imaging is a widely used tool in oncology because of its noninvasiveness and real-time performance. However, its diagnostic accuracy can be limited by the skill of the examiner performing manual scanning and by acoustic shadows that degrade image quality. Artificial intelligence (AI) technologies can support examiners in cancer screening and diagnosis by addressing these limitations. Here, we examine recent advances in AI research and development for US imaging in oncology. Breast cancer has been the most extensively studied cancer, with research predominantly focusing on tumor detection, differentiation between benign and malignant lesions, and prediction of lymph node metastasis. The American College of Radiology has developed imaging reporting and data systems for various cancers, which are often used to evaluate the accuracy of AI models. We also explore the clinical application of AI in US imaging for oncology. Despite this progress, the number of approved AI-equipped software as a medical device (AI-SaMD) products for US imaging remains limited in Japan, the United States, and Europe. Practical issues that must be addressed for clinical application include domain shift, the black box problem, and acoustic shadows. To address these issues, advances in image quality control, AI explainability, and the preprocessing of acoustic shadows are essential.

Key words: ultrasound imaging, oncology, artificial intelligence, SaMD, domain shift, explainability, acoustic shadow

Advancements in artificial intelligence (AI) technologies have significantly influenced the medical field, particularly oncology, facilitating research and the development of personalized medicine (1), (2), (3), (4), (5). The U.S. Food and Drug Administration (FDA) publishes a list of AI- and machine learning (ML)-enabled medical devices marketed in the United States (6). By August 2024, the FDA had authorized 950 AI/ML-enabled medical devices, predominantly AI-equipped software as a medical device (AI-SaMD) for medical image diagnosis using modalities such as computed tomography (CT), magnetic resonance imaging, and X-ray imaging. In Japan, AI-based products undergo a different approval process from that in the United States and must be reviewed and approved by the Pharmaceuticals and Medical Devices Agency (PMDA). A dedicated SaMD review office was established within the PMDA in 2021. As of July 2024, PMDA-approved AI-SaMDs for medical imaging were mainly related to endoscopy and CT, and only 2 of 27 (7.4%) were for ultrasound (US) imaging (https://www.pmda.go.jp/PmdaSearch/kikiSearch/, accessed July 25, 2024).

Compared with other medical imaging modalities, US imaging is simple, noninvasive, and real-time. Advances in US technology, such as three-dimensional (3D) and four-dimensional (4D) imaging, portable devices, and point-of-care applications, have expanded its use across various medical fields, including oncology. However, because US images are acquired manually, viewpoints, cross sections, and diagnostic accuracy can vary and depend heavily on the skill of the examiner. Acoustic shadows can also degrade image quality, reducing diagnostic reliability. AI can help address these issues and enhance image quality (7), (8), (9), (10), (11), but the inherent limitations of US, such as limited resolution, artifacts, and manual scanning, also make AI-based analysis difficult (12). Among the 950 FDA-authorized devices, only 59 (6.2%) were related to US. Similarly, in Europe, only 10 (4.7%) of the 213 Conformité Européenne (CE)-marked AI-SaMDs are related to US (https://radiology.healthairegister.com/, accessed July 29, 2024) (Table 1) (13). Furthermore, the clinical use of AI-SaMDs raises ethical issues, such as patient consent and diagnostic responsibility, that must be considered. This review explores the current progress and future directions of AI research and development in US imaging for oncology.

Table 1. List of AI-Based Ultrasound SaMDs Available in Europe (CE Marked).

| No. | Product name (company) | Subspecialty | Description | CE certification | FDA certification | On market since |
|---|---|---|---|---|---|---|
| 1 | LVivo Toolbox - Cardiac (DiA Imaging Analysis) | Cardiac | An AI echocardiographic analysis solution that provides objective echocardiographic image analysis aimed at reducing the challenges associated with manually capturing and visually analyzing ultrasound images | Certified, Class IIa, MDD | 510(k) cleared, Class II | 2013 |
| 2 | QVCAD (QView Medical) | Breast | An ANN-based system used as an adjunct to radiologists conducting breast screening exams for women who have negative mammogram results but dense breast tissue and therefore undergo 3D automated breast ultrasound (ABUS) | Certified, Class unknown | PMA approved, Class III | 09-2016 |
| 3 | AmCAD-UT® (AmCad BioMed) | Head and neck | An AI solution that quantifies and visualizes ultrasound image features to help physicians make informed decisions | Certified, Class IIa, MDD | 510(k) cleared, Class II | 2017 |
| 4 | EchoGo Core (Ultromics) | Cardiac | An automated solution for cardiovascular findings to calculate ejection fraction, global longitudinal strain, and left ventricular volume | Certified, Class I, MDD | 510(k) cleared, Class II | 11-2019 |
| 5 | EchoGo Pro (Ultromics) | Cardiac | An outcome-based AI system to predict coronary artery disease | Certified, Class I, MDD | 510(k) cleared, Class II | 03-2020 |
| 6 | LVivo Bladder (DiA Imaging Analysis) | Bladder | A solution that transforms any on-premise ultrasound device into an AI-powered bladder scanner to accurately measure bladder capacity with a single click | Certified, Class IIa, MDD | 510(k) cleared, Class II | 06-2020 |
| 7 | TRACE4OC (DeepTrace Technologies Srl) | Ovary | A decision support system based on radiomics and machine learning to predict the malignant risk of ovarian mass from transvaginal ultrasonography and serum CA-125 levels | Certified, Class I, MDD | - | 05-2021 |
| 8 | Koios DS (Koios Medical, Inc) | Breast and thyroid | An AI-based software application designed to help trained readers analyze breast and thyroid ultrasound images | Certified, Class IIb, MDR | 510(k) cleared, Class II | 12-2021 |
| 9 | Ligence Heart (Ligence) | Cardiac | A platform that reads DICOM format data, recognizes image views and cardiac cycles, performs measurements, and generates reports based on the findings | Certified, Class IIa, MDR | - | 04-2022 |
| 10 | Us2.v1 (Us2.AI) | Cardiac | A machine learning-based solution that analyzes and interprets echocardiogram and ultrasound images of the heart to generate patient reports and is available on mobile, on-premises, and cloud-based platforms | Certified, Class IIb, MDR | 510(k) cleared, Class II | 06-2022 |

Abbreviations: AI, artificial intelligence; SaMD, software as a medical device; CE, Conformité Européenne; FDA, Food and Drug Administration; ANN, artificial neural network; ABUS, automated breast ultrasound; PMA, premarket approval; MDD, medical device directive; MDR, medical device regulation.

PubMed was searched for relevant studies published in the last 5 years using the following search strategy: (“ultrasound”) AND (“cancer”) AND (“artificial intelligence” OR “machine learning” OR “deep learning”); the search was performed on July 25, 2024. Cancers are discussed below in descending order of the number of published studies.

Breast cancer remains the most extensively studied cancer type in this field. Mammography (MG) is the primary screening tool, whereas US imaging is particularly useful for patients with dense breasts. The Breast Imaging Reporting and Data System (BI-RADS) standardizes the interpretation and reporting of breast US. Yang et al. developed a deep learning (DL) model combining US and MG features that improved malignancy prediction for BI-RADS 4A breast lesions in patients with dense breasts (14). Another AI system was developed to identify breast lesions of BI-RADS category 4A or higher; its area under the receiver operating characteristic curve (AUC), sensitivity, and specificity were 0.95, 91.2%, and 90.7%, respectively. When its diagnostic accuracy was compared with that of 20 clinicians, the AI system significantly outperformed them (p < 0.001) (15), and this AI-SaMD was approved by the PMDA in 2024. In another retrospective reader study of breast cancer identification on US images, an AI system achieved a higher AUC than 10 board-certified breast radiologists (AI, 0.962; radiologists, 0.924), reducing false-positive rates by 37.3% and biopsy requests by 27.8% while maintaining sensitivity (16).
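The performance figures quoted above follow standard definitions. The minimal Python sketch below uses hypothetical toy labels and scores (not data from any cited study) and an assumed decision threshold of 0.5. It computes sensitivity and specificity from thresholded predictions, and it computes the AUC as the probability that a randomly chosen malignant case scores higher than a randomly chosen benign case (the Mann-Whitney formulation, with ties counted as one half).

```python
"""Toy computation of sensitivity, specificity, and ROC AUC (illustrative only)."""
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels (1 = malignant, 0 = benign)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (malignant, benign) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical model outputs for six lesions (not from any cited study).
    labels = [1, 1, 1, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]
    threshold = 0.5  # assumed operating point
    preds = [1 if s >= threshold else 0 for s in scores]
    sens, spec = sensitivity_specificity(labels, preds)
    print(f"sensitivity={sens:.3f} specificity={spec:.3f} AUC={roc_auc(labels, scores):.3f}")
```

Running it prints `sensitivity=0.667 specificity=0.667 AUC=0.889`. The AUC does not depend on a threshold, whereas sensitivity and specificity change with the chosen operating point, so the two kinds of figures are complementary.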

In a multimodal analysis, a DL model combining preoperative US images with hematoxylin and eosin-stained biopsy whole-slide images was used to differentiate between luminal and nonluminal early-stage breast cancers (17). Accurate identification of axillary lymph node (ALN) involvement in patients with early-stage breast cancer is important for selecting appropriate treatment. DL radiomics combining conventional US and shear wave elastography distinguished disease-free from metastatic axillae with an AUC of 0.902 and low from high metastatic axillary burden with an AUC of 0.905 (18). Guo et al. developed a multicenter DL radiomics model for predicting the risk of axillary nonsentinel lymph node involvement in primary breast cancer (19).

To triage women with palpable breast lumps in a low-resource setting, an AI system analyzed portable US images of 758 masses in 300 women and categorized them as benign, probably benign, suspicious, or malignant; it identified malignancies with an AUC of 0.91 (20). Another AI system analyzed breast US images captured using a smartphone, delivering predictions in just 2 s with 100% sensitivity and 97.5% specificity for malignant lesions (21). Because thyroid and breast lesions share US imaging features, one study applied AI computer-assisted diagnosis software originally developed for thyroid nodules to breast lesions; however, the findings indicated that an organ-specific approach would improve diagnostic performance (22).

Thyroid cancer is the second most extensively studied cancer in this field, partly because it shares US imaging features with breast cancer. The American College of Radiology (ACR) developed the Thyroid Imaging Reporting and Data System (TI-RADS) to standardize the risk categorization of thyroid nodules on US. Several DL models have been developed to differentiate between benign and malignant calcified thyroid nodules (23), (24). For example, an Xception model achieved an AUC of 0.970 (25), and a multichannel Xception-based framework has been proposed (26). The object detection model YOLOv3, combined with a nomogram, improved discrimination between benign and malignant TI-RADS category 4 thyroid nodules (27).

An ensemble DL model showed higher accuracy than other thyroid nodule classification methods and US radiologists (28). A multimodal model combining US and infrared thermal images (29) and advanced multimodal imaging using AI-optimized B-mode, elastography, and contrast-enhanced US (30) have been proposed to aid diagnosis. Radiomic features derived from US images, combined with clinical US features, effectively differentiated thyroid follicular carcinomas from adenomas (31).

A prospective multicenter study applied multiscale, multiframe, and dual-direction DL models to US videos to preoperatively predict cervical lymph node metastasis in patients with papillary thyroid carcinoma (32). Furthermore, an integrated model incorporating DL, handcrafted radiomics, and clinical and US imaging features was used to diagnose central lymph node metastasis in patients with papillary thyroid carcinoma (33).

Liver cancer ranks among the top five causes of cancer-related death in 90 countries, and cases are expected to rise over the next two decades (34). A preliminary study demonstrated the feasibility of using AI to detect focal liver lesions in real-time US videos (35). In a comparison of OpenCV tracking algorithms for liver tumors in US videos, a correlation filter tracker with channel and spatial reliability and a modified tracker achieved an intersection over union (IoU) above 70% and a successful search rate above 85% in real-time processing (36). Using a large-scale dataset of 50,063 US images and video frames from 11,468 patients, Xu et al. developed a fully automated AI pipeline that mimics the radiologist's workflow for detecting liver masses and diagnosing liver malignancy (37). In another study, AI models outperformed human experts in distinguishing among four tumor types (cysts, hemangiomas, hepatocellular carcinoma, and metastatic tumors) and in classifying them as benign or malignant (38). Moreover, segmentation of blood vessels in laparoscopic liver US images can enable automatic registration to CT, helping guide laparoscopic liver resection (39).
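IoU, the tracking metric cited above, is the area of overlap between a predicted box and a reference box divided by the area of their union. The Python sketch below assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max) in pixels. The `success_rate` helper is hypothetical: it uses a common tracking convention (a frame counts as a success when IoU reaches a threshold, assumed here to be 0.5), and the cited study may define a "successful search" differently.

```python
"""Intersection over Union (IoU) for axis-aligned bounding boxes (illustrative sketch)."""
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Overlap area divided by union area; 0.0 when the boxes do not intersect."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def success_rate(predicted: Sequence[Box], reference: Sequence[Box], threshold: float = 0.5) -> float:
    """Hypothetical helper: fraction of frames whose tracked box has IoU >= threshold."""
    hits = sum(1 for p, r in zip(predicted, reference) if iou(p, r) >= threshold)
    return hits / len(reference)
```

For example, `iou((0, 0, 10, 10), (5, 5, 15, 15))` returns 25/175, about 0.143, because the boxes share a 5 × 5 region and their union covers 175 square pixels.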

Pancreatic cancer has one of the poorest prognoses of all cancers, with a 5-year survival rate of 11% (40). The use of endoscopic US (EUS) for diagnosis and treatment has gained attention. EUS-guided procedures enable early cancer detection and minimally invasive palliative or antitumor treatment, improving patients' quality of life. A YOLOv5-based model effectively differentiated pancreatic cancer from non-pancreatic-cancer lesions in real time on EUS images (41). Automatic segmentation of pancreatic tumors using U-Net on contrast-enhanced EUS video images showed good concordance (42). A DL-based system using deep convolutional neural networks and random forest algorithms can facilitate pancreatic mass diagnosis and EUS-guided fine-needle aspiration in real time (43). Zhang et al. developed a system for EUS training and quality control that uses ResNet for image classification and U-Net++ for image segmentation, achieving 90.0% classification accuracy and Dice coefficients of 0.770 and 0.813 for blood vessel and pancreas segmentation, respectively; these results were comparable with those of experts (44). An ML model predicted the clinical diagnosis of chronic pseudotumoral pancreatitis, neuroendocrine tumors, and ductal adenocarcinoma with an AUC of 0.98 and an overall accuracy of 98.3% (45).
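The Dice coefficient reported for segmentation measures the overlap between a predicted mask P and a reference mask R as 2|P ∩ R| / (|P| + |R|), on a scale from 0 (no overlap) to 1 (perfect agreement). The Python sketch below uses hypothetical binary masks stored as nested lists. Returning 1.0 when both masks are empty is an assumed convention, and the conversion helper shows the fixed relationship between Dice and IoU.

```python
"""Dice similarity coefficient for binary segmentation masks (illustrative sketch)."""
from typing import List

Mask = List[List[int]]  # 1 = structure (e.g., pancreas), 0 = background


def dice(pred: Mask, ref: Mask) -> float:
    """Dice = 2|P ∩ R| / (|P| + |R|); returns 1.0 if both masks are empty (a convention)."""
    inter = sum(p & r for prow, rrow in zip(pred, ref) for p, r in zip(prow, rrow))
    size_p = sum(map(sum, pred))
    size_r = sum(map(sum, ref))
    if size_p + size_r == 0:
        return 1.0
    return 2.0 * inter / (size_p + size_r)


def iou_from_dice(d: float) -> float:
    """Dice and IoU are monotonically related: IoU = D / (2 - D)."""
    return d / (2.0 - d)
```

For the hypothetical masks `[[1, 1, 0], [1, 0, 0]]` and `[[1, 0, 0], [1, 1, 0]]`, two of the three foreground pixels in each mask coincide, so `dice` returns 4/6 (about 0.667) and the corresponding IoU is 0.5.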

Ovarian cancer is a leading cause of gynecological cancer-related death worldwide; however, no effective screening method has been established. Previous trials combining cancer antigen 125 (CA-125) testing with transvaginal ultrasonography did not significantly reduce mortality (46). A DL radiomics model was developed to differentiate among benign, borderline, and malignant ovarian tumors on US images (47). US image analysis using an ensemble of deep neural networks predicted ovarian malignancy with diagnostic accuracy comparable with that of experts (48). A DL radiomics nomogram based on US imaging accurately predicted the malignancy risk of ovarian tumors, consistent with the ACR Ovarian-Adnexal Reporting and Data System (O-RADS) guidelines (49). OvcaFinder was developed as an interpretable model that combines US image-based DL predictions, O-RADS scores, and routine clinical variables (50). Gao et al. conducted a retrospective, multicenter study using a large dataset to develop a deep convolutional neural network model for the automated evaluation of US images; with model assistance, radiologists achieved significantly higher diagnostic accuracy and sensitivity than radiologists alone. Further prospective studies or randomized clinical trials are required to confirm these findings (51).

AI studies using US imaging have also been conducted for other cancers. One study implemented a 3D DL model for automatically segmenting tongue tumors in ex vivo 3D US volumes (52). DL models can also automatically classify submucosal tumors in esophageal EUS images and identify their invasion depth and lesion origin (53). For prostate cancer, a two-dimensional U-Net model accurately segmented the clinical target volume corresponding to the prostate on 3D transrectal US images with brachytherapy-implanted needles (54). An EfficientNet-B4 model classified US images of skin tumors as benign or malignant and was at least as accurate as an experienced dermatologist (55). Additionally, using 1,102 transvaginal US images from 796 patients with cervical cancer, U-Net-based automatic segmentation achieved high accuracy in delineating cervical cancer target areas (56). Furthermore, convolutional neural network-based DL models achieved an AUC of 0.85 for diagnosing rectal cancer on endoanal US (57).

DL technology excels at image recognition and is extensively used in AI-SaMDs for medical image diagnosis. However, DL requires large amounts of training data, and clinical data are often limited. Pooling data from multiple facilities can introduce domain shift, in which the distributions of training and test data differ because of differences between facilities or machines, reducing model performance. Proposed solutions include federated learning, in which a model is trained across distributed datasets without centralizing the data, and domain adaptation or fine-tuning of trained models on multicenter, multimachine data (58), (59).
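To make the federated learning idea concrete, the Python sketch below implements one widely used scheme, federated averaging (FedAvg), on a deliberately tiny one-parameter model y = w·x. Everything here is a hypothetical illustration rather than the method of the cited works: each site runs a few steps of gradient descent on its own data and shares only the updated weight, and the server averages the weights in proportion to each site's sample count. The learning rate, number of local epochs, and site data are assumptions.

```python
"""Minimal federated averaging (FedAvg-style) sketch on a one-parameter model y = w * x.

Assumptions (hypothetical, for illustration only): each site holds (x, y) pairs,
trains locally with plain gradient descent on squared error, and shares only the
updated weight; the server averages weights in proportion to site sample counts.
"""
from typing import List, Tuple

Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run full-batch gradient descent on mean squared error at one site."""
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fedavg_round(w_global: float, sites: List[Dataset]) -> float:
    """One communication round: local training at each site, then a weighted average."""
    total = sum(len(d) for d in sites)
    return sum(len(d) / total * local_update(w_global, d) for d in sites)


if __name__ == "__main__":
    # Two hypothetical sites; their raw (x, y) pairs never leave the site.
    site_a = [(1.0, 2.0), (2.0, 4.0)]
    site_b = [(3.0, 6.0)]
    w = 0.0
    for _ in range(50):
        w = fedavg_round(w, [site_a, site_b])
    print(f"global weight after 50 rounds: {w:.4f}")
```

Running it prints `global weight after 50 rounds: 2.0000`. In this toy setting, calling `local_update` on a single new site's data starting from an already trained weight uses the same mechanics as fine-tuning. Real multicenter US data differ between sites (the domain shift described above), which this example does not model.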

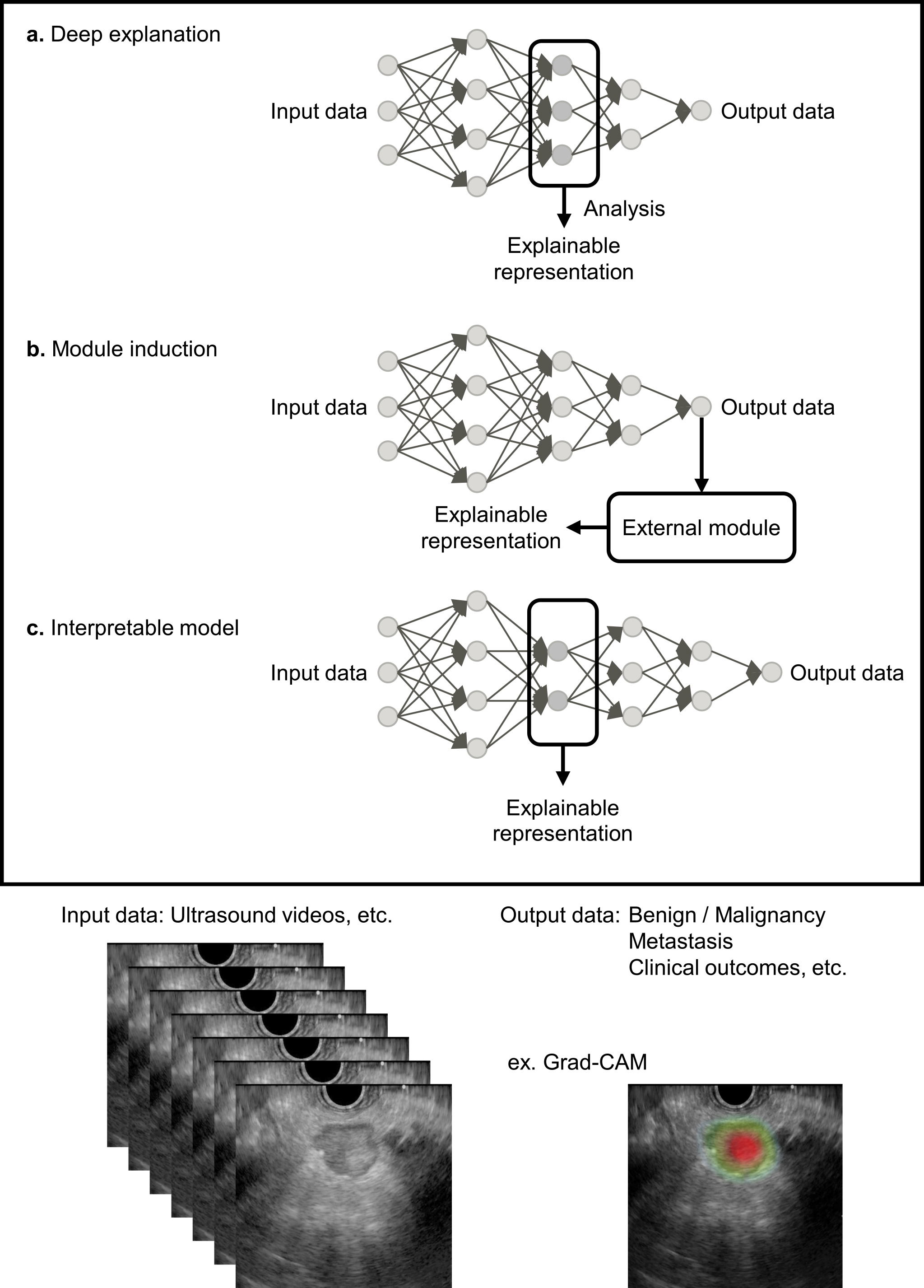

The black box problem arises because humans cannot fully understand the decision-making process of DL models with complex convolutional neural network structures. Improving AI explainability is essential both for medical professionals who use AI-based medical devices and for patients who must give informed consent based on diagnostic results (60). Countermeasures for the black box problem include visualizing the internal behavior of the model (deep explanation), adding an external explanation module to the model (model induction), and using other explainable representations (interpretable models) (Figure 1) (61), (62). A representative deep explanation method is heat map display using gradient-weighted class activation mapping (Grad-CAM) (63). Grad-CAM uses the gradients of a target concept (e.g., a class score) flowing into the final convolutional layer to produce a coarse localization map that highlights the regions of the US image that are important for the prediction. Examples of interpretable models include barcodes and graph charts that display target area detection results in US videos (9), (11).
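The core Grad-CAM computation can be written in two steps. For each feature map A^k of the final convolutional layer, a weight α_k is the spatial average of the gradient of the class score y with respect to A^k. The localization map is then ReLU(Σ_k α_k A^k). The Python sketch below shows only this combination step. In practice, the feature maps and gradients are extracted from a trained network using the framework's automatic differentiation. Here, as a labeled assumption, the class score comes from a hypothetical toy head (global average pooling followed by a linear layer with weights `v`), so the gradients can be written down analytically. Upsampling the map to image resolution and normalizing it are omitted.

```python
"""Core Grad-CAM combination step on toy feature maps (illustrative sketch).

Assumption: the class score is y = sum_k v[k] * mean(A[k]) (global average pooling
followed by a linear layer), so dy/dA[k][i][j] = v[k] / (H * W) can be written
down analytically instead of being obtained by backpropagation.
"""
from typing import List

Map2D = List[List[float]]


def toy_gradients(activations: List[Map2D], v: List[float]) -> List[Map2D]:
    """Analytic gradients of the toy class score with respect to each feature map."""
    h, w = len(activations[0]), len(activations[0][0])
    return [[[v[k] / (h * w)] * w for _ in range(h)] for k in range(len(activations))]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """alpha_k = spatial mean of gradients; CAM = ReLU(sum_k alpha_k * A_k)."""
    h, w = len(activations[0]), len(activations[0][0])
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    return [
        [max(0.0, sum(a * act[i][j] for a, act in zip(alphas, activations))) for j in range(w)]
        for i in range(h)
    ]
```

With two hypothetical 2 × 2 feature maps, `[[1, 0], [0, 0]]` and `[[0, 0], [0, 1]]`, and head weights `v = [1.0, -1.0]`, `grad_cam` returns `[[0.25, 0.0], [0.0, 0.0]]`. The positively weighted channel survives, and the ReLU suppresses evidence against the class. For this particular toy head, Grad-CAM reduces to ordinary class activation mapping up to a scale factor.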

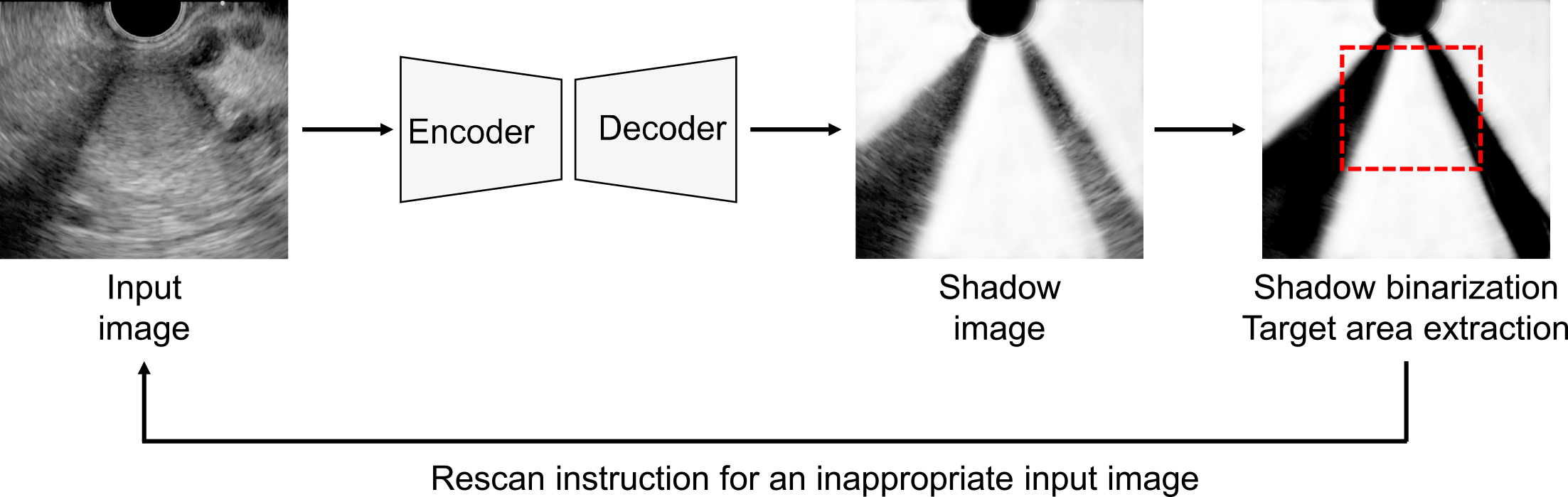

Acoustic shadows are common artifacts that hinder both the US diagnosis of target organs and AI-based image recognition. Conventional AI-based methods for detecting acoustic shadows require labor-intensive pixel-level annotation of shadows with semitransparent, blurred boundaries. To streamline this process, weakly supervised learning methods have been developed that superimpose artificial shadows on US images and use them as pseudo-labels for training models (64). Chen et al. proposed a semisupervised shadow-aware network with boundary refinement, which improves the accuracy of shadow detection (65). By automatically evaluating the effect of acoustic shadows, these methods improve anomaly detection and can guide examiners to rescan problematic images (Figure 2). Additionally, a vendor-specific approach uses an ML model trained with preimaging data to distinguish noise that interferes with diagnosis from useful speckle signals, effectively filtering out unnecessary noise in US images.
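The pseudo-labeling idea can be illustrated with a simplified Python sketch. The sketch darkens a band of columns below a chosen depth, roughly how a shadow falls along the beam direction behind a strongly reflecting structure, and keeps the applied attenuation as a pixel-wise label. It is not the method of the cited studies, which use auto-encoding structures or semisupervised networks. The following are hypothetical simplifications: rows represent depth and columns represent vertical scan lines (linear-probe geometry), the shadow extends to the bottom of the image, and its lateral edges fade linearly over `edge` columns to mimic semitransparent boundaries.

```python
"""Superimpose a synthetic acoustic shadow and keep it as a pseudo-label (illustrative sketch).

Simplifying assumptions (hypothetical): image rows are depth and columns are
scan lines (linear-probe geometry), the shadow extends from depth_start to the
bottom of the image, and its lateral edges fade linearly over `edge` columns to
mimic semitransparent, blurred boundaries.
"""
from typing import List, Tuple

Image = List[List[float]]


def add_synthetic_shadow(image: Image, col_start: int, col_end: int, depth_start: int,
                         strength: float = 0.8, edge: int = 0) -> Tuple[Image, Image]:
    """Return (shadowed image, pseudo-label), where the label holds per-pixel attenuation."""
    h, w = len(image), len(image[0])
    shadowed = [row[:] for row in image]  # copy so the input image is left unchanged
    label = [[0.0] * w for _ in range(h)]
    for j in range(max(0, col_start), min(w, col_end)):
        dist = min(j - col_start, col_end - 1 - j)  # columns to the nearest shadow edge
        taper = min(1.0, (dist + 1) / (edge + 1))   # equals 1.0 everywhere when edge == 0
        s = strength * taper
        for i in range(max(0, depth_start), h):
            label[i][j] = s
            shadowed[i][j] = image[i][j] * (1.0 - s)
    return shadowed, label
```

A training loop could apply this function at random positions to shadow-free images and train a network to predict `label` from `shadowed`. Because the labels are generated rather than drawn by hand, no pixel-level annotation is needed.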

In this review, we examined AI-based advances in US imaging within the field of oncology. Most studies have focused on tumor detection, differentiation between benign and malignant lesions, and prediction of lymph node metastasis, which aligns with clinical needs. Despite these advances, clinical implementation remains limited. The market for AI/ML-enabled medical devices is expected to grow due to increasing innovation. Future applications of AI in medicine are anticipated to enhance operational efficiency and support the development of infrastructures such as remote healthcare and digital medical databases. To successfully integrate AI into clinical US imaging for oncology, it is crucial to address and resolve practical challenges such as domain shifts, explainability, and acoustic shadows.

Conflicts of Interest: None

This work was supported by the Cabinet Office BRIDGE (programs for bridging the gap between R&D and the ideal society (Society 5.0) and generating economic and social value) and the MEXT subsidy for the Advanced Integrated Intelligence Platform.

We thank all the members of the Hamamoto Laboratory for their valuable discussions.

Approval by Institutional Review Board (IRB): Not applicable.

Hamamoto R, Komatsu M, Takasawa K, et al. Epigenetics analysis and integrated analysis of multiomics data, including epigenetic data, using artificial intelligence in the era of precision medicine. Biomolecules. 2020;10(1):62.

Hamamoto R, Koyama T, Kouno N, et al. Introducing AI to the molecular tumor board: one direction toward the establishment of precision medicine using large-scale cancer clinical and biological information. Exp Hematol Oncol. 2022;11(1):82.

Hamamoto R, Suvarna K, Yamada M, et al. Application of artificial intelligence technology in oncology: towards the establishment of precision medicine. Cancers (Basel). 2020;12(12):3532.

Jinnai S, Yamazaki N, Hirano Y, et al. The development of a skin cancer classification system for pigmented skin lesions using deep learning. Biomolecules. 2020;10(8):1123.

Yamada M, Saito Y, Imaoka H, et al. Development of a real-time endoscopic image diagnosis support system using deep learning technology in colonoscopy. Sci Rep. 2019;9(1):14465.

U.S. Food and Drug Administration (FDA). Artificial intelligence and machine learning (AI/ML)-enabled medical devices [Internet]. [cited 2024 Aug 10]. Available from: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices.

Dozen A, Komatsu M, Sakai A, et al. Image segmentation of the ventricular septum in fetal cardiac ultrasound videos based on deep learning using time-series information. Biomolecules. 2020;10(11):1526.

Shozu K, Komatsu M, Sakai A, et al. Model-agnostic method for thoracic wall segmentation in fetal ultrasound videos. Biomolecules. 2020;10(12):1691.

Komatsu M, Sakai A, Komatsu R, et al. Detection of cardiac structural abnormalities in fetal ultrasound videos using deep learning. Applied Sciences. 2021;11(1):371.

Ono S, Komatsu M, Sakai A, et al. Automated endocardial border detection and left ventricular functional assessment in echocardiography using deep learning. Biomedicines. 2022;10(5):1082.

Sakai A, Komatsu M, Komatsu R, et al. Medical professional enhancement using explainable artificial intelligence in fetal cardiac ultrasound screening. Biomedicines. 2022;10(3):551.

Komatsu M, Sakai A, Dozen A, et al. Towards clinical application of artificial intelligence in ultrasound imaging. Biomedicines. 2021;9(7):720.

van Leeuwen KG, Schalekamp S, Rutten MJ, et al. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur Radiol. 2021;31(6):3797-804.

Yang Y, Zhong Y, Li J, et al. Deep learning combining mammography and ultrasound images to predict the malignancy of BI-RADS US 4A lesions in women with dense breasts: a diagnostic study. Int J Surg. 2024;110(5):2604-13.

Hayashida T, Odani E, Kikuchi M, et al. Establishment of a deep-learning system to diagnose BI-RADS4a or higher using breast ultrasound for clinical application. Cancer Sci. 2022;113(10):3528-34.

Shen Y, Shamout FE, Oliver JR, et al. Artificial intelligence system reduces false-positive findings in the interpretation of breast ultrasound exams. Nat Commun. 2021;12(1):5645.

Huang Y, Yao Z, Li L, et al. Deep learning radiopathomics based on preoperative US images and biopsy whole slide images can distinguish between luminal and non-luminal tumors in early-stage breast cancers. EBioMedicine. 2023;94:104706.

Zheng X, Yao Z, Huang Y, et al. Deep learning radiomics can predict axillary lymph node status in early-stage breast cancer. Nat Commun. 2020;12(1):4370.

Guo X, Liu Z, Sun C, et al. Deep learning radiomics of ultrasonography: identifying the risk of axillary non-sentinel lymph node involvement in primary breast cancer. EBioMedicine. 2020;60:103018.

Berg WA, López Aldrete AL, Jairaj A, et al. Toward AI-supported US triage of women with palpable breast lumps in a low-resource setting. Radiology. 2023;307(4):e223351.

Mori R, Okawa M, Tokumaru Y, et al. Application of an artificial intelligence-based system in the diagnosis of breast ultrasound images obtained using a smartphone. World J Surg Oncol. 2024;22(1):2.

Lee SE, Lee E, Kim EK, et al. Application of artificial intelligence computer-assisted diagnosis originally developed for thyroid nodules to breast lesions on ultrasound. J Digit Imaging. 2022;35(6):1699-707.

Zheng Z, Su T, Wang Y, et al. A novel ultrasound image diagnostic method for thyroid nodules. Sci Rep. 2023;13(1):1654.

Xu D, Wang Y, Wu H, et al. An artificial intelligence ultrasound system's ability to distinguish benign from malignant follicular-patterned lesions. Front Endocrinol (Lausanne). 2022;13:981403.

Chen C, Liu Y, Yao J, et al. Deep learning approaches for differentiating thyroid nodules with calcification: a two-center study. BMC Cancer. 2023;23(1):1139.

Zhang X, Lee VCS, Rong J, et al. Multi-channel convolutional neural network architectures for thyroid cancer detection. PLoS One. 2022;17(1):e0262128.

Zhang X, Jia C, Sun M, et al. The application value of deep learning-based nomograms in benign-malignant discrimination of TI-RADS category 4 thyroid nodules. Sci Rep. 2024;14(1):7878.

Wei X, Gao M, Yu R, et al. Ensemble deep learning model for multicenter classification of thyroid nodules on ultrasound images. Med Sci Monit. 2020;26:e926096.

Zhang N, Liu J, Jin Y, et al. An adaptive multi-modal hybrid model for classifying thyroid nodules by combining ultrasound and infrared thermal images. BMC Bioinformatics. 2023;24(1):315.

Jung EM, Stroszczynski C, Jung F. Advanced multimodal imaging of solid thyroid lesions with artificial intelligence-optimized B-mode, elastography, and contrast-enhanced ultrasonography parametric and with perfusion imaging: initial results. Clin Hemorheol Microcirc. 2023;84(2):227-36.

Yu B, Li Y, Yu X, et al. Differentiate thyroid follicular adenoma from carcinoma with combined ultrasound radiomics features and clinical ultrasound features. J Digit Imaging. 2022;35(5):1362-72.

Zhang MB, Meng ZL, Mao Y, et al. Cervical lymph node metastasis prediction from papillary thyroid carcinoma US videos: a prospective multicenter study. BMC Med. 2024;22(1):153.

Gao Y, Wang W, Yang Y, et al. An integrated model incorporating deep learning, hand-crafted radiomics and clinical and US features to diagnose central lymph node metastasis in patients with papillary thyroid cancer. BMC Cancer. 2024;24(1):69.

Rumgay H, Arnold M, Ferlay J, et al. Global burden of primary liver cancer in 2020 and predictions to 2040. J Hepatol. 2022;77(6):1598-606.

Tiyarattanachai T, Apiparakoon T, Marukatat S, et al. The feasibility to use artificial intelligence to aid detecting focal liver lesions in real-time ultrasound: a preliminary study based on videos. Sci Rep. 2022;12(1):7749.

Levin AA, Klimov DD, Nechunaev AA, et al. Assessment of experimental OpenCV tracking algorithms for ultrasound videos. Sci Rep. 2023;13(1):6765.

Xu Y, Zheng B, Liu X, et al. Improving artificial intelligence pipeline for liver malignancy diagnosis using ultrasound images and video frames. Brief Bioinform. 2023;24(1):bbac569.

Nishida N, Yamakawa M, Shiina T, et al. Artificial intelligence (AI) models for the ultrasonographic diagnosis of liver tumors and comparison of diagnostic accuracies between AI and human experts. J Gastroenterol. 2022;57(4):309-21.

Montaña-Brown N, Ramalhinho J, Allam M, et al. Vessel segmentation for automatic registration of untracked laparoscopic ultrasound to CT of the liver. Int J Comput Assist Radiol Surg. 2021;16(7):1151-60.

Storz P. Earliest metabolic changes associated with the initiation of pancreatic cancer. Cancer Res. 2024;84(14):2225-6.

Tian G, Xu D, He Y, et al. Deep learning for real-time auxiliary diagnosis of pancreatic cancer in endoscopic ultrasonography. Front Oncol. 2022;12:973652.

Iwasa Y, Iwashita T, Takeuchi Y, et al. Automatic segmentation of pancreatic tumors using deep learning on a video image of contrast-enhanced endoscopic ultrasound. J Clin Med. 2021;10(16):3589.

Tang A, Tian L, Gao K, et al. Contrast-enhanced harmonic endoscopic ultrasound (CH-EUS) MASTER: a novel deep learning-based system in pancreatic mass diagnosis. Cancer Med. 2023;12(7):7962-73.

Zhang J, Zhu L, Yao L, et al. Deep learning-based pancreas segmentation and station recognition system in EUS: development and validation of a useful training tool (with video). Gastrointest Endosc. 2020;92(4):874-85.e3.

Udristoiu AL, Cazacu IM, Gruionu LG, et al. Real-time computer-aided diagnosis of focal pancreatic masses from endoscopic ultrasound imaging based on a hybrid convolutional and long short-term memory neural network model. PLoS One. 2021;16(6):e0251701.

Ishizawa S, Niu J, Tammemagi MC, et al. Estimating sojourn time and sensitivity of screening for ovarian cancer using a Bayesian framework. J Natl Cancer Inst. 2024. doi:10.1093/jnci/djae145

Du Y, Guo W, Xiao Y, et al. Ultrasound-based deep learning radiomics model for differentiating benign, borderline, and malignant ovarian tumours: a multi-class classification exploratory study. BMC Med Imaging. 2024;24(1):89.

Christiansen F, Epstein EL, Smedberg E, et al. Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: comparison with expert subjective assessment. Ultrasound Obstet Gynecol. 2021;57(1):155-63.

Du Y, Xiao Y, Guo W, et al. Development and validation of an ultrasound-based deep learning radiomics nomogram for predicting the malignant risk of ovarian tumours. Biomed Eng Online. 2024;23(1):41.

Xiang H, Xiao Y, Li F, et al. Development and validation of an interpretable model integrating multimodal information for improving ovarian cancer diagnosis. Nat Commun. 2024;15(1):2681.

Gao Y, Zeng S, Xu X, et al. Deep learning-enabled pelvic ultrasound images for accurate diagnosis of ovarian cancer in China: a retrospective, multicentre, diagnostic study. Lancet Digit Health. 2022;4(3):e179-87.

Bekedam NM, Idzerda LHW, van Alphen MJA, et al. Implementing a deep learning model for automatic tongue tumour segmentation in ex-vivo 3-dimensional ultrasound volumes. Br J Oral Maxillofac Surg. 2024;62(3):284-9.

Liu GS, Huang PY, Wen ML, et al. Application of endoscopic ultrasonography for detecting esophageal lesions based on convolutional neural network. World J Gastroenterol. 2022;28(22):2457-67.

Hampole P, Harding T, Gillies D, et al. Deep learning-based ultrasound auto-segmentation of the prostate with brachytherapy implanted needles. Med Phys. 2024;51(4):2665-77.

Laverde-Saad A, Jfri A, García R, et al. Discriminative deep learning based benignity/malignancy diagnosis of dermatologic ultrasound skin lesions with pretrained artificial intelligence architecture. Skin Res Technol. 2022;28(1):35-9.

Jin J, Zhu H, Teng Y, et al. The accuracy and radiomics feature effects of multiple U-net-based automatic segmentation models for transvaginal ultrasound images of cervical cancer. J Digit Imaging. 2022;35(4):983-92.

Carter D, Bykhovsky D, Hasky A, et al. Convolutional neural network deep learning model accurately detects rectal cancer in endoanal ultrasounds. Tech Coloproctol. 2024;28(1):44.

Zhao S, Yue X, Zhang S, et al. A review of single-source deep unsupervised visual domain adaptation. IEEE Trans Neural Netw Learn Syst. 2022;33(2):473-93.

Takahashi S, Takahashi M, Kinoshita M, et al. Fine-tuning approach for segmentation of gliomas in brain magnetic resonance images with a machine learning method to normalize image differences among facilities. Cancers (Basel). 2021;13(6):1415.

Kundu S. AI in medicine must be explainable. Nat Med. 2021;27(8):1328.

Das A, Rad P. Opportunities and challenges in explainable artificial intelligence (XAI): a survey. arXiv:2006.11371 [Preprint]. 2020.

Lauritsen SM, Kristensen M, Olsen MV, et al. Explainable artificial intelligence model to predict acute critical illness from electronic health records. Nat Commun. 2020;11(1):3852.

Selvaraju RR, Cogswell M, Das A, et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2020;128:336-59.

Yasutomi S, Arakaki T, Matsuoka R, et al. Shadow estimation for ultrasound images using auto-encoding structures and synthetic shadows. Applied Sciences. 2021;11(3):1127.

Chen F, Chen L, Kong W, et al. Deep semi-supervised ultrasound image segmentation by using a shadow aware network with boundary refinement. IEEE Trans Med Imaging. 2023;42(12):3779-93.